Final prep

7 questions

- x86 assembly code

after each line:- determine value of result

- address/register were result is stored (numeric values in case of address)

- Cache organization / Paging / Table organization

Which bits are used to select what (byte indexing, set selection, ...)

Pages on the PU- given page size, size of page descriptor, virtual address size - figure out minimum number of levels in the address table to implement the structure

- What's the maximum number of address bits which could still be covered by that table structure

- Operations of virtual memory

- 4 ! different functions of memory management

- Role of exceptions

- Role of the paging unit

- What is done in hardware/software for virtual memory system

- Describe the register sets / model of condition operations / flags

- Programming model vs. implementation - properties of programming model

What characteristics of the programming model make pipelining possible - Calling convention - function prologue/epilogue

Cache organization

Cache design

Data is stored in big units called lines, x4 size of memory word (32/64 bytes)

LSB of address is used to select part of line

Fully associative cache

Data accessed based on association of data - similar to phone book

Every cell may store data from any address - all cells must be searched during every address

Results in misses if working set exceeds cache size

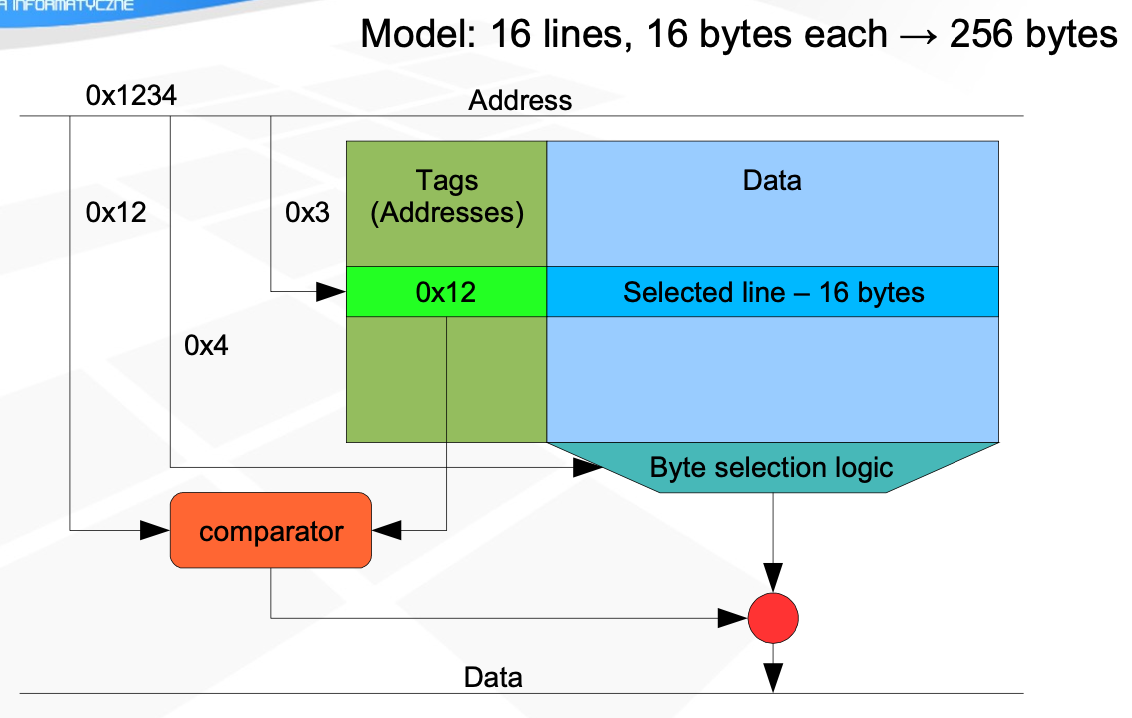

Direct mapped cache

Ordinary RAM, single equality operator

LSB part of address - offset at line

Middle part - address in cache

MSB - address tag, physical address, compared with one obtained from cache

During every access cycle, one line is selected based on middle bits

Cache hit - address tag matches address from cache

Cache miss - selected line is replaces with data fetched from memory

Speeds ups references, if working set is smaller than x2 cache capacity

Data from given address may be stored only in one line, cannot store data from two addresses with identical middle parts

Set associative cache

Several direct-mapped caches, number of possible data locations equal to number of blocks

every cycle searches for a single line of every block - collection of lines - set

such sets behave line a tiny fully-associative cache

Number of blocks - cache associativity - two way / four way

Allows to store data from addresses with equal middle part

Selecting data

24KiB cache, with 32B line size, 4-way associative

Left bits are the MSB of the physical address (compared with one stored in cache)

EXERCISE

System uses - 40bit virtual address, 8KiB page size and Page Table Entry is 4 bytes. What is the minimum number of levels in hierarchical table needed to fit every page?

8KiB page size =

- 13 bits are used as offset in the virtual address

This leavesbytes left for page indexing

entries per page, so 11 bits are used for each level

EXERCISE

System uses - 52bit virtual address, 16KiB page size and 10bit PTE

16KiB page size =

- 14 bits as offset

bits left for level indexing

- 10 bits per level

levels

EXCERCISE

In a virtual memory system, size of virtual address is 32-bit, size of physical address is 30-bit, page size is 4 Kbyte and size of each page table entry is 32-bit. The main memory is byte addressable. Which one of the following is the maximum number of bits that can be used for storing protection and other information in each page table entry?

4KB page -

- 12 bits of offset 20 bits left for level indexing

Physical address is 30 bits - sum of PFN and offset, so PFN takesbits

PTE contains PFN and additional information, which takes 32 - 18 = 14 bits.

EXCERCISE

Consider a system with byte-addressable memory, 32 bit logical addresses, 4 kilobyte page size and page table entries of 4 bytes each. The size of the page table in the system in megabytes is

Total addressable space -

bytes

Single page addressable space -bytes

Number of pages needed

With each page having 4 bytes per PTE -of space

Operations of virtual memory

The memory visible in an application programming model (by software) is virtual memory layer of memory hierarchy - not physical

Virtualization allows the process to see the same amount of memory available, regardless of the actual memory size and memory utilization by other processes.

The virtualization is managed by Memory Management Unit, which translates the logical address provided by the processor (from process' virtual memory) into a physical address.

Most basic way of doing so is called simple relocation and bases on saving process' base address and adding it to the logical one. This is simple, fast but causes memory fragmentation due to allocating and deallocating space for the processes memory space.

Second operation is segmentation which divides the process' memory space into segments - most commonly 4 - text (code), static data, stack and heap. Every of the segments has their own segment identifier, and every data in them is accessed using intrasegment address, which is added into the base linear address, obtained from segment descriptor (to which the segment identifier points to). This calculating is done in the segmentation unit. The segment descriptor contains:

- validity tag - is the data in cache valid

- access rights - compared with access attributes from the logical address

- segment size

- linear base address

Virtual memory can be created based on the segmentation, by dividing the address space into many fragments. Usually it is - 1+ code, 1+ heap, 1 static data and 1 stack. The division of heap and code into segments creates much more difficult pointer arithmetic, and creates fragmentation which can be very time consuming.

Last alternative is paging which divides the logical and physical memory into small, size-aligned blocks of size. Blocks in virtual memory are called pages, and in physical - frames. The paging unit (part of MMU) is responsible for translating the virtual address into the physical one. It is done based on the associating a virtual page number with physical page number, additionally with offset. The Translation Buffer (actual hardware structure doing the searching and calculations in PU), takes virtual address containing Virtual Page Number and Offset. Based on the VPN, the TLB finds the corresponding PPN in the cache. The TLB searches through pages, which have tree-of-tables structure, one entry in the page leading to a whole another page, due to high number of memory references needed in modern computers.

Paging is not visible in the application programming model, which makes it perfect for virtualization.

4 functions of memory management

1. Hardware address relocation

Ensuring the same layout of logical address space for all processes, including multiple processes of the same program (binary image)

2. Protection

Not allowing processes to access data not explicitly allocated/allowed for them to access. Ensuring correct accesses to the addresses

3. Dynamic allocation and reallocation

Shrinking and enlarging the size of address space allocated to the process

4. Virtualization

Ensuring the process sees the same amount of memory available, regardless of the actual memory size and memory utilization by other processes

Role of exceptions

Exceptions are a way of signaling the processor of important events during a process' execution. We divide exceptions into asynchronous (not caused by the program being executed) - interrupts, and synchronous (result of instruction execution) - traps and errors.

The exceptions can be used as event signals - as in case of interrupts about data transfer finish, key press, mouse move etc, or program errors (faults, aborts) - undefined instruction, misaligned memory access, protection rules violation. The traps are used to suspend program's execution, used for example in debugging (instruction stepping).

Role of paging unit

The paging unit is used to translate a virtual address obtained by the processor from process, into a physical address for the memory. The translation is done by Translation Buffer, which combines offset from the virtual address, and the appropriate physical page number obtained from the page descriptor found by TLB in the page tables.

Using the TLB, the page unit finds the corresponding physical address, checks access rights and validity of the found data in order to then serve back the correct data to the processor.

What is done in hardware/software for virtual memory system

On the hardware level, the modifications depend on the used virtual memory solution. For simple relocation its the addition of relocation unit, for segmentation - segmentation unit and for paging - paging unit, all in Memory Management Unit.

In software level, the segmentation divides process' memory space into segments, which is visible in the application programming model. The introduction of multiprocess processor which requires dividing the segments (code and heap) further into smaller subsegments greatly complicates the pointer arithmetics and requires time consuming defragmention, caused by allocation/deallocation of memory space resulting in fragmentation.

Paging on the other hand is invisible to the application programming model, but requires the introduction and allocation of pages at the OS level, as well as their management.

Describe register sets / model of condition operations / flags

Register sets

- General purpose - temporary data, variables, immediate results

- Special purpose - stack pointer, program counter, etc

- Status register / flags - holds condition flags set by operations

- Instruction register - holds the current instruction being executed

- Index/base register - used for addressing modes

Model of condition operations

Condition operations are based on flag values. Instructions:

JE ...- checks if Z flag is equal to 1, and if it is, jumpsJL ...- checks for mismatch between SF and OF

Flags

- Z (zero) - set if result is equal to zero

- CF (carry flag) - set if unsigned overflow occurs (carry in addition, or borrow in subtraction)

- SF (sign flag) - set if result is negative

- OF (overflow flag) - set if signed overflow occurs (result too large or too small for signed representation)

- PF (parity flag) - set if number of 1 bits is even (historically used for error checking)

Programming model vs. implementation - properties of programming model

what characteristics of the programming model make pipelining possible

For the architecture to be implementable in pipeline processor, it needs to meet specific requirements which form the base of pipeline processor concept. These are:

- Constant instruction length

- Set instruction execution steps, the same for all instructions

- Max one memory reference in a single instruction

- Max one destination register in a single instruction

These requirements ensure that the pipeline can be run without basic delays (we omit the hazards and branch delays in that reasoning), with one instruction being started and one being finished every cycle, constantly.

The RISC architecture is perfect for this reason, as every instruction is executed in the same steps, in the same amount of cycles.

The CISC architecture has many different length instructions, with each of them having different execution order, which makes it impossible to create a 'clean', 1to1 implementation of CISC pipeline.

Calling convention - function prologue and epilogue

In the x86 C calling convention, before the main part of a routine, there a few steps that need to be done to ensure proper, safe code execution.

First of them is saving the initial stack pointer, which can be then used to address local variables and passed arguments. Then, if any callee-saved registers are used such as EBX, they have to be saved (easiest way is to push them onto the stack). This ensures proper stack alignment on the function return.

After the routine, the function epilogue has to be run, which return the most important register values back to their original ones. The values pushed onto the stack during the prologue are now popped back, in the reverse order (due to the stack build and workings), and the return instruction called, to return from the routine.

Last year exam

1. Simple data types - characteristic, binary representation

numeric, no decimal:

- short - 16 bits

- integer - 32 bits, unsigned / signed. If signed - most commonly two's complement

- long - 64 bits

floating point - single precision 32 bits, double precision 64 bits. Typical for 32 bits - 1 sign bit, 8 exponent bits, 23 mantissa bits

character: - ASCII - 7 bits, size-aligned so

sizeof()shows 8 bits - UNICODE - 1-4 bytes, depending on the encoding

boolean - logically 1 bit, stored as 8 bits for size alignment

2. Describe features of RISC architecture which make it possible to implement a processor as pipeline

Main features of RISC that allows to implement a pipeline processor:

- Constant instruction length, does not vary as in CISC for example

- Set instruction execution order

- Max one memory access per instruction

- Instructions operate on set, known data lengths

- Only one destination argument per instruction

3. Methods of interacting with I/O devices

There are three main methods of communicating with peripheral devices

- Polling - infinite loop in the executing code. Doesn't require any hardware additions, but takes processor time and is only suitable for single-process execution units.

- Interrupts - peripheral announces its ready state to the processor via an interrupt. Exception service instruction then proceeds to receive the data. If the processor is not ready to receive the data, the process is suspended.

Very small hardware overhead, mainly software implementation, but causes lots of switching between 'normal' processes and exception service, which causes delays. - Direct Memory Access (DMA) - additional component of the computer which goal is to communicate with the peripheral and exchange data. The DMA receives a whole line of data from the device, directly placing it into memory (without the interference of execution unit) and signals the exec unit about the received data via raising an exception. This greatly reduces number of exceptions a execution unit must serve, compared to the previous method.

Requires additional hardware, but completely disconnects execution unit from transfer control.

4. Addressing modes

There are multiple addressing modes:

- Register direct -

mov eax, ebx - Register immediate -

mov eax, 123 - Register indirect -

mov eax, [ebx] - Register indirect with displacement -

mov eax, [ebx + 2] - Double register indirect -

mov eax, [ebx + ecx] - Absolute direct -

mov eax, [memVal]

For the simplest architecture and basic HLL support, only three are needed - register direct, register immediate and one of register indirects (usually with displacement)

5. Hardware address relocation (definition, purpose, implementation)

Hardware address relocation is a process of implementing an software-based virtual address which is the translated into a physical address, used in physical memory. It allows the program to run without the need for manual, per-program memory management, as each program can run in its own, virtual memory which is then translated onto the physical address space.

It's purpose is to simplify memory management on the user process level, allow multiple processes to run at the same time and memory protection between processes.

The translation of the virtual address into the physical one happens by adding the internal, virtual address to the base program address saved in the relocation/base register. By this operation, the execution unit is able to calculate the proper physical address of the memory needed.

6. Interrupts

Interrupts are a type of exception, which can get thrown both by software and hardware. If the interrupt is thrown at the processor level, its priority level is usually low and the service is handled between instructions, but if it is thrown at the OS level, its priority is usually the highest and must be services as fast as possible, due to the risk of it being lost. They are serviced by Interrupt Service Routine.

Examples of interrupts are - key press, mouse move, disk data transfer finish interrupt, network packet packet received.

During Interrupt Service Routine, the interrupt priority level is saved in the system status register, and no other lower interrupt may interfere with the service routine, only higher-level ones.

During the interrupt service routine, current processor context is saved (in architectures with stack - onto the stack, without - context save register), and restored after the service is ended.

7. Following x86 assembly routine

push ebp

mov ebp, esp

mov ebx, [ebp + 8]

xor eax, eax

oclop:

adc, al 0

shr ebx, 1

jz fin

jmp oclop

fin:

adc, al 0

pop ebp

ret

a) The possible C language declaration/prototype of this module

unsigned int ocnt(unsigned int val);

b) The purpose of this routine (the return value) is:

a number of 1's in the input binary representation (bit count)

c) The unconditional jump may be removed by the following modification:

; original

;jz fin

;jmp oclop

; new

jnz oclop

d) The routine violates the x86 C calling convention because

it uses but does not save EBX register, which is a callee-saved register.

e) To make it compliant with x86 C calling convention, it should be modified by:

pushing EBX onto the stack right after the function prologue and popping it before the epilogue

; prologue

push ebp

mov ebp, esp

push ebx

mov ebx, [ebp + 8]

;...

; epilogue

pop ebx

pop ebp

ret